Episode 125: Silicon Valley vs. Science Fiction: ChatGPT

Cover image from Iain M. Banks’ Culture novel Look to Windward, by Mark Salwowski.

This is the first in a series of monthly episodes we’ll be doing about how Silicon Valley appropriates and misinterprets science fiction. Silicon Valley executives claim to be inspired by SF, but mostly they use it retroactively to justify their products, often missing the more complicated, nuanced ideas embedded in the original stories. Today we’re going to tackle the hype cycle around A.I., which borrows liberally from the post-scarcity, post-human visions of Iain M. Banks in his Culture novels. It’s time for … the Culture vs. ChatGPT!

Notes, Citations & Etc.

Ezra Klein’s 2021 interview with Sam Altman

Our previous discussion of Asimov’s short story “The Last Question,” in episode 119.

Emily Bender discusses Sam Altman’s rhetorical slippage between what’s really happening and what could happen with AI.

Noah Smith describes going to a party with a bunch of AI researchers.

Financial Times charts the meteoric rise in investment in A.I. companies in the past year, when capital investment rose 425 percent to $2.1 billion.

Timnit Gebru et al.’s paper on stochastic parrots

Ted Chiang’s article about how ChatGPT is a blurry JPEG of the web

Sam Altman’s blog post in 2015 about intelligence

FTC guidelines to A.I. companies telling them to stop the false advertising and hype for their products

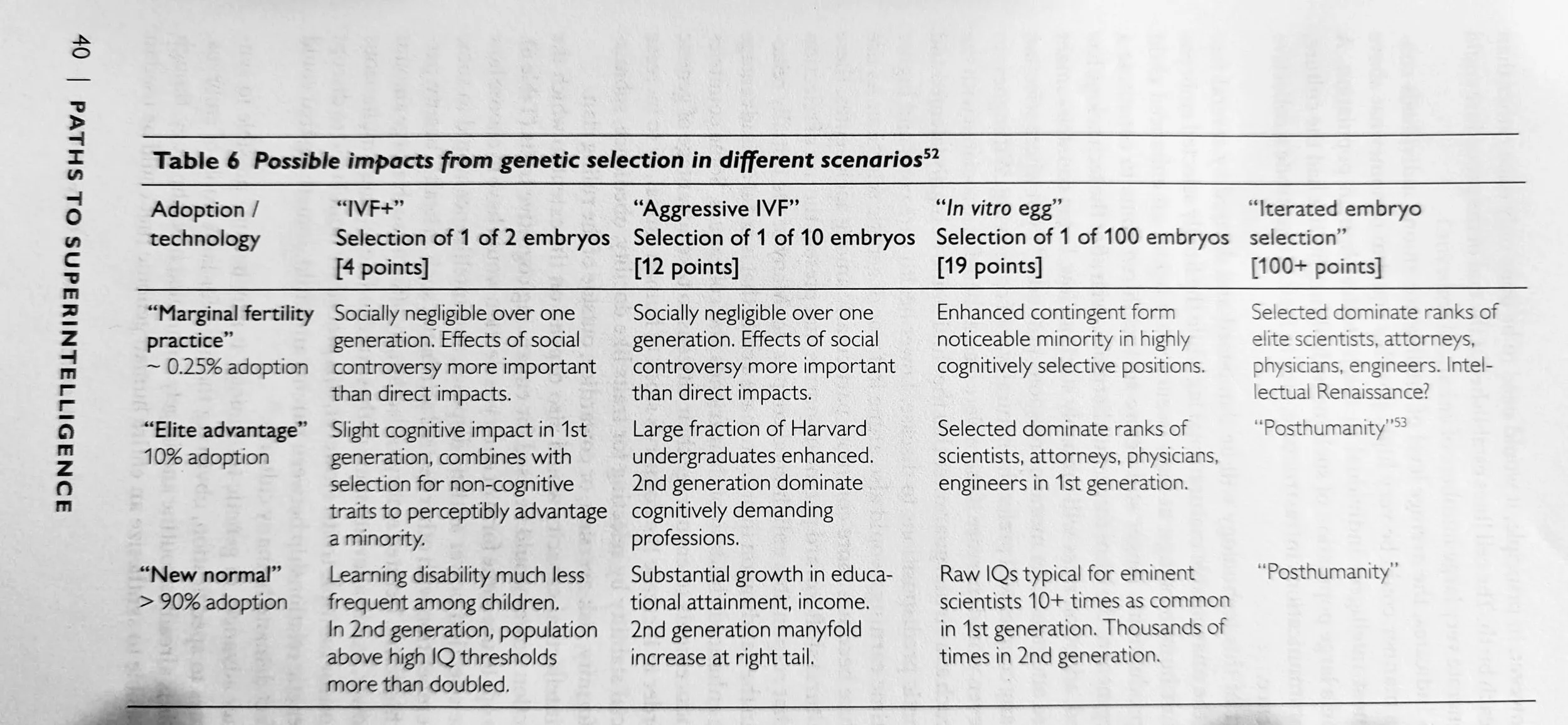

Nick Bostrom, Superintelligence

Eugenics chart from Superintelligence, p. 40

Charles Murray and Richard Herrnstein, The Bell Curve

OpenAI’s statement of purpose in “Planning for AGI and Beyond”

“Nick Bostrom is the Donald Trump of AI”

Oren Etzioni on the problem with AI’s existential threat model and Pascal’s Wager

Time Magazine’s investigative report on Kenyan workers who label toxic content to moderate ChatGPT

New York Times report on how Bing’s ChatGPT became malevolent and weird

Time Magazine report on how Bing’s ChatGPT has started to threaten users